The Complete Guide to Node.js Streams

April 19, 2023

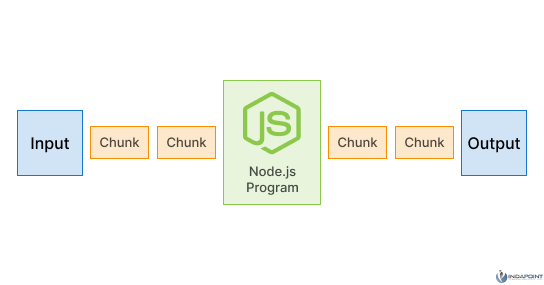

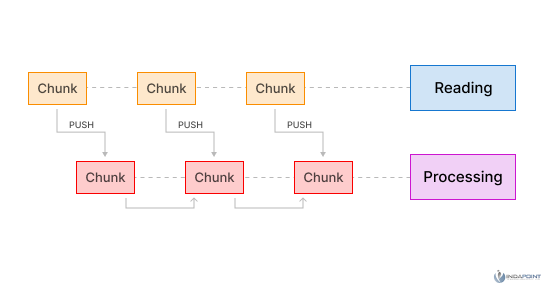

Streams act as a bridge between where data is stored and where it is processed. Node.js streams are used to read and write data continuously. They differ from traditional methods of reading or writing data, which require the whole payload to be held in memory before it can be used. To read a file the traditional way, for example, the entire file must first be copied into memory, which adds to application latency. Applications that use streams, on the other hand, read a file in chunks and process each chunk one by one.
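The difference between the two approaches can be sketched as follows. This is a minimal illustration, not a benchmark: the function names and the temporary demo file are assumptions made up for this example, while `fs.promises.readFile` and `fs.createReadStream` are the standard Node.js calls.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Traditional approach: the whole file is loaded into memory first.
async function countBytesBuffered(filePath) {
  const data = await fs.promises.readFile(filePath);
  return data.length;
}

// Streaming approach: the file is read chunk by chunk, so only the
// current chunk needs to be held while it is processed.
async function countBytesStreamed(filePath) {
  let total = 0;
  // Readable streams are async iterable, so for await...of yields chunks.
  for await (const chunk of fs.createReadStream(filePath)) {
    total += chunk.length;
  }
  return total;
}

// Hypothetical demo file, created only for this example.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'streams-read-'));
const demoFile = path.join(demoDir, 'input.txt');
fs.writeFileSync(demoFile, 'hello streams\n'.repeat(1000));

countBytesStreamed(demoFile).then((total) => {
  console.log(`streamed ${total} bytes`);
});
```

Both functions return the same count. The streamed version simply never needs the entire file in memory at once, which matters as files grow.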

Streams offer memory efficiency and performance advantages. A website that streams its content performs better than one that loads entire files before users can use them, because data is loaded as it is needed. This guide will discuss streams in Node.js and show you examples of readable streams and writable streams.

What Are Streams in Node.js?

Streams are an abstraction for working with data that is read or written sequentially. They are a fundamental Node.js concept that enables data to flow between inputs and outputs.

Streams are vital in Node.js because they handle large amounts of data efficiently. They process data in chunks rather than loading it all at once. Data is streamed from a source (such as a file or a network socket) to a destination (such as a response object or another file) without the whole payload being buffered in memory first. You can read from and write to files, network sockets, and stdin/stdout, among other data sources.
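As a rough sketch of data flowing from a source to a destination, the example below copies one file to another with `pipe`. The `copyFile` helper and the temporary file names are hypothetical and exist only for illustration.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Copies src to dest chunk by chunk instead of loading the whole file first.
function copyFile(src, dest) {
  return new Promise((resolve, reject) => {
    const source = fs.createReadStream(src);
    const destination = fs.createWriteStream(dest);
    // pipe() does not forward errors, so each stream gets its own handler.
    source.on('error', reject);
    destination.on('error', reject);
    // 'finish' fires once all data has been flushed to the destination.
    destination.on('finish', resolve);
    source.pipe(destination);
  });
}

// Hypothetical demo files, created only for this example.
const copyDir = fs.mkdtempSync(path.join(os.tmpdir(), 'streams-copy-'));
const srcFile = path.join(copyDir, 'source.txt');
const destFile = path.join(copyDir, 'copy.txt');
fs.writeFileSync(srcFile, 'line of text\n'.repeat(500));

copyFile(srcFile, destFile).then(() => console.log('copy finished'));
```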

Types of Streams

The stream module provides the following five stream classes:

- Readable: A stream that data is read from. It is the source of streaming data.

- Writable: A stream that data is written to. It is also known as a sink because it is the destination for streaming data.

- Duplex: A duplex stream implements both interfaces, readable and writable. A TCP socket is an example of a duplex stream, which allows data to flow in both directions.

- Transform: A duplex stream that can transform data as it passes through, so the output may differ from the input. Data written to a transform stream can be read back from it after it has been transformed.

- PassThrough: A transform stream that passes data through unchanged. It is primarily used for testing and examples.
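To make the list more concrete, here is a small sketch that chains four of these types together. The function name `runTypesDemo` and the sample data are assumptions for this example. A standalone Duplex is left out because a realistic one, such as a TCP socket, needs a network connection; note that the Transform shown here is itself a Duplex.

```javascript
const { Readable, Writable, Transform, PassThrough } = require('node:stream');
const { pipeline } = require('node:stream/promises');

async function runTypesDemo() {
  const received = [];

  // Readable: the source of the data.
  const source = Readable.from(['a', 'b', 'c']);

  // Transform: the output is computed from the input.
  const upperCase = new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });

  // PassThrough: forwards every chunk unchanged.
  const passThrough = new PassThrough();

  // Writable: the sink that collects whatever arrives.
  const sink = new Writable({
    write(chunk, encoding, callback) {
      received.push(chunk.toString());
      callback();
    },
  });

  await pipeline(source, upperCase, passThrough, sink);
  return received.join('');
}

runTypesDemo().then((result) => console.log(result));
```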

The Advantages of Node.js Streaming

Many companies, such as Netflix and Uber, use Node.js and leverage its streams to improve the manageability, maintainability, and performance of their applications. These are some benefits of using streams in your Node.js apps.

High memory efficiency

Streams can process large amounts of data piece by piece without storing everything in memory at once. This lets them handle files and data too big to fit into memory.

Performance

Because streams process data in chunks, they can be more efficient than methods that read or write all the data at once. This is especially useful for real-time applications that need low latency or high throughput.

Flexibility

Streams can handle a wide variety of data sources and destinations, including files, network sockets, and HTTP requests and responses. This makes streams a flexible tool for data handling in various contexts.

Modularity

Node streams can be combined and piped together to simplify complex data processing tasks. This makes code simpler to understand and maintain.

Backpressure handling

Streams can handle backpressure by slowing down the data source when the destination cannot keep up. This can prevent buffer overflows or other performance problems.

Streams in Node.js can improve performance, scalability, and maintainability for large data processing applications. It is time for us to learn more about Node.js streaming and the use cases for Node.js streams.
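Backpressure can also be handled by hand, which shows what the mechanism looks like. The sketch below is one possible approach under stated assumptions: `writeAll` and `createSlowSink` are hypothetical helpers, and the tiny `highWaterMark` and the 5 ms delay are arbitrary values chosen to make the destination fall behind quickly.

```javascript
const { Writable } = require('node:stream');
const { once } = require('node:events');

// Writes every chunk to `writable`, pausing whenever its buffer is full.
async function writeAll(writable, chunks) {
  let pauses = 0;
  for (const chunk of chunks) {
    // write() returns false once the buffered data reaches highWaterMark.
    if (!writable.write(chunk)) {
      pauses += 1;
      await once(writable, 'drain'); // wait until the buffer has emptied
    }
  }
  // Register the listener before end() so 'finish' cannot be missed.
  const finished = once(writable, 'finish');
  writable.end();
  await finished;
  return pauses;
}

// A deliberately slow sink with a tiny buffer (demo values only).
function createSlowSink(collected) {
  return new Writable({
    highWaterMark: 4, // bytes
    write(chunk, encoding, callback) {
      collected.push(chunk.toString());
      setTimeout(callback, 5); // simulate a slow destination
    },
  });
}

const collected = [];
writeAll(createSlowSink(collected), ['aaaa', 'bbbb', 'cccc']).then((pauses) => {
  console.log(`wrote ${collected.length} chunks, paused ${pauses} times`);
});
```

In everyday code you rarely need to write this loop yourself, since piping streams together applies the same pause-and-resume logic automatically.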

Stream Events

All streams are instances of EventEmitter. An EventEmitter emits named events and lets you register listeners that respond to them; the Node.js documentation covers EventEmitter in more detail. Streams use events to signal that data can be read or written and to report errors.

Although streams are EventEmitter instances, consuming a stream solely by listening to its events is not recommended. The pipe method or the pipeline function is a better option. These methods consume streams and handle the events for you.
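A minimal sketch of the pipeline approach is shown below, using gzip compression as the processing step. The `gzipFile` helper and the temporary file names are assumptions for this example, and the promise-based `pipeline` from `node:stream/promises` requires a reasonably recent Node.js version.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');
const zlib = require('node:zlib');
const { pipeline } = require('node:stream/promises');

// Compresses src into dest. pipeline() wires the streams together,
// reports an error from any stage, and destroys the streams on failure.
async function gzipFile(src, dest) {
  await pipeline(
    fs.createReadStream(src),
    zlib.createGzip(),
    fs.createWriteStream(dest)
  );
}

// Hypothetical demo files, created only for this example.
const gzipDir = fs.mkdtempSync(path.join(os.tmpdir(), 'streams-gzip-'));
const plainFile = path.join(gzipDir, 'notes.txt');
const gzippedFile = path.join(gzipDir, 'notes.txt.gz');
fs.writeFileSync(plainFile, 'some repetitive text\n'.repeat(200));

gzipFile(plainFile, gzippedFile)
  .then(() => console.log('compressed'))
  .catch((err) => console.error('pipeline failed:', err));
```

No event listeners appear in this code: the returned promise resolves when the last stream has finished and rejects if any stage fails.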

Stream events are helpful when you need finer control over how a stream is consumed, for example to run code when a stream starts or ends. For more information, take a look at the Node.js streams documentation.

Readable Stream Events

- data: Emitted when the stream outputs a chunk of data.

- readable: Emitted when there is data available to be read from the stream.

- end: Emitted when there is no more data to be consumed from the stream.

- error: Emitted when a stream error occurs; an error object is passed to the handler. An unhandled stream error can cause the application to crash.
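The events above can be wired up as in the following sketch. Both helper names are hypothetical. The first function relies on the data event, and the second on the readable event together with `read()`. A stream is normally consumed with one style or the other, not both.

```javascript
const { Readable } = require('node:stream');

// Collects a stream into a string using the 'data' event.
function readWithEvents(readable) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    // Attaching a 'data' listener switches the stream into flowing mode.
    readable.on('data', (chunk) => chunks.push(String(chunk)));
    readable.on('end', () => resolve(chunks.join('')));
    readable.on('error', reject);
  });
}

// Collects a stream into a string using the 'readable' event.
function readWithReadableEvent(readable) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    readable.on('readable', () => {
      let chunk;
      // read() returns null once the internal buffer is empty.
      while ((chunk = readable.read()) !== null) {
        chunks.push(String(chunk));
      }
    });
    readable.on('end', () => resolve(chunks.join('')));
    readable.on('error', reject);
  });
}

readWithEvents(Readable.from(['one ', 'two ', 'three']))
  .then((text) => console.log(text))
  .catch((err) => console.error('stream failed:', err));
```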

Writable Stream Events

- drain: Emitted when the internal buffer of the writable stream has been cleared and the stream is ready for more data to be written into it.

- finish: Emitted after end() has been called and all data has been written.

- error: Emitted when an error occurs while writing data; an error object is passed to the handler. An unhandled stream error can cause the application to crash.
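Here is a sketch of the three writable events working together. The helpers `writeWithEvents` and `createTinySink` are made up for this example, and the one-byte `highWaterMark` is an arbitrary value chosen so that the drain event fires after every write.

```javascript
const { Writable } = require('node:stream');

// Writes chunks using events only and resolves with the events it observed.
function writeWithEvents(writable, chunks) {
  return new Promise((resolve, reject) => {
    const events = [];
    let index = 0;

    function writeNext() {
      while (index < chunks.length) {
        const ok = writable.write(chunks[index]);
        index += 1;
        if (!ok) return; // buffer full: resume from the 'drain' handler
      }
      writable.end(); // no more data: 'finish' follows once all is flushed
    }

    writable.on('drain', () => {
      events.push('drain');
      writeNext();
    });
    writable.on('finish', () => {
      events.push('finish');
      resolve(events);
    });
    writable.on('error', reject);
    writeNext();
  });
}

// A sink with a one-byte buffer (demo value only).
function createTinySink() {
  return new Writable({
    highWaterMark: 1,
    write(chunk, encoding, callback) {
      setImmediate(callback);
    },
  });
}

writeWithEvents(createTinySink(), ['ab', 'cd'])
  .then((events) => console.log(events.join(', ')))
  .catch((err) => console.error('write failed:', err));
```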

Conclusion

Streams are an integral component of Node.js. They are often more efficient than traditional data management methods, and they allow developers to create performant, real-time applications. Streams can be challenging to understand at first, but they become easier as you learn more about them and use them in your apps.

This guide covered the basics of Node.js streams. Once you are comfortable with the basics, you can move on to more advanced methods for working with streams.

When building real-world applications, it is essential to have a stateful database that allows you to stream documents and collections.
