How to Exponentially Enhance Your Web Application in 2022

May 19, 2022

Scalability is an important aspect of developing a web app. Whatever type of project you intend to launch, you should be prepared for a surge of users and make sure the system can handle it. Be especially careful here: if your system is not flexible enough, it may not be able to handle a heavy load. To avoid this, start thinking about application scalability before you move on to development.

Definition of Web Application Scalability

Scalability refers to your web application’s ability to handle an increasing number of people engaging with it at the same time. In other words, a scalable web application can serve one user or a thousand, and it can cope with both traffic spikes and drops.

Solutions for Server Scaling

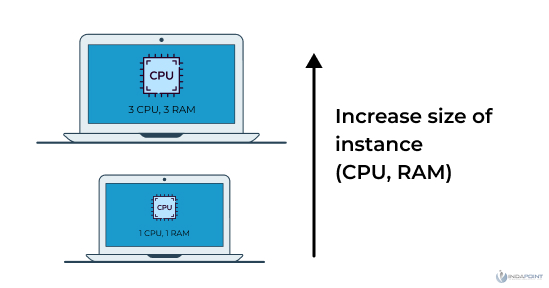

Vertical Scaling (Scaling Up)

As our user base expands, the web server will eventually run out of RAM and CPU, causing the program to slow down, fail, or crash. You might wonder whether we can simply add more CPU and RAM. We can: this is called vertical scaling, often known as scaling up. The architecture pattern remains the same; we are merely adding resources to an existing server or replacing it with a more capable one.

Vertical scaling has a limit, since you will eventually exhaust the resource capacity of the machine you are using. It is also more expensive to run one high-capacity server, such as a single machine with 128 GB of RAM, than four 32 GB servers with the same 128 GB in total; past a certain point, the extra capacity you gain is small compared to the money you invest. Another drawback of this strategy is that it still leaves a single point of failure: if something goes wrong with the server, your application goes down, because there is no redundancy or fallback option.

Horizontal Scaling (Scaling Out)

We looked into scaling up our server, but we haven’t found a long-term solution, and our infrastructure still isn’t fault-tolerant. Horizontal scaling is a distributed architecture pattern in which you add more servers that perform the same function. Because you can keep growing from 1 server to n, horizontal scaling is not capped by the capacity of a single machine. It also adds resilience to the application’s infrastructure: if one server fails, the remaining servers can take over its traffic. And as you go up the scale, it becomes less expensive than vertical scaling.
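To put a rough number on the resilience argument, the sketch below computes how likely it is that at least one of n servers is up. It assumes, as a simplification, that servers fail independently and that the application stays available as long as one server is running; the 0.99 per-server availability is an illustrative figure, not a measurement.

```python
def combined_availability(per_server: float, servers: int) -> float:
    """Probability that at least one of `servers` independent replicas is up.

    Assumes independent failures, which real deployments only approximate
    (shared networks, power, and bad deployments can take servers down together).
    """
    if not 0.0 <= per_server <= 1.0:
        raise ValueError("per_server must be a probability between 0 and 1")
    if servers < 1:
        raise ValueError("need at least one server")
    # The whole system is down only if every replica is down at the same time.
    return 1.0 - (1.0 - per_server) ** servers


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, round(combined_availability(0.99, n), 6))
```

With the illustrative 0.99 figure, one server gives 0.99, two give 0.9999, and three give 0.999999, which is why even a small pool of interchangeable servers removes most of the single-point-of-failure risk.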

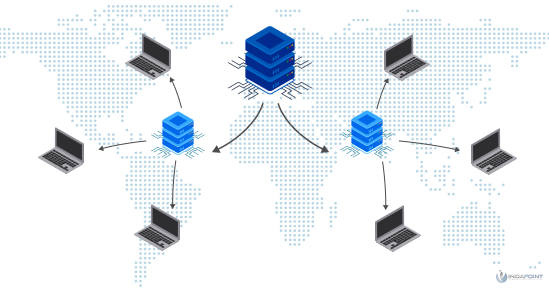

Distributing traffic is one of the complexities that scaling out introduces. Load balancers are used to spread traffic evenly across the servers. The load balancer sits between the clients and the servers as an intermediary: it knows the servers’ IP addresses and can therefore route each client request to one of them.
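The routing decision a load balancer makes can be sketched as a round-robin rotation over the server addresses it knows about, skipping any server marked as unhealthy. This is a minimal sketch assuming round-robin as the strategy (real load balancers also offer least-connections, weighted, and other policies); the class name and the private IP addresses are made up for illustration and do not reflect any specific product.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backend addresses in turn, skipping ones marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._healthy = set(backends)
        self._ring = cycle(self._backends)  # endless iterator over the servers

    def mark_down(self, backend: str) -> None:
        # In practice a health check would call this after failed probes.
        self._healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self) -> str:
        # Walk at most one full lap of the ring looking for a healthy server.
        for _ in range(len(self._backends)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

For example, with `RoundRobinBalancer(["10.0.0.1", "10.0.0.2", "10.0.0.3"])`, successive requests go to .1, .2, .3, .1, and so on; after `mark_down("10.0.0.2")`, that server is simply skipped until it is marked up again.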

With only one load balancer, the single-point-of-failure problem comes back. To solve it, two or three load balancers are typically set up: one actively directs traffic while the others wait as backups, ready to take over if it fails.
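The active/backup arrangement boils down to a priority-ordered failover rule: traffic goes to the first healthy load balancer, and a backup is promoted only when every node ahead of it has failed. The sketch below assumes the `healthy` flag is kept up to date by some monitoring mechanism (in practice this is often a heartbeat protocol such as VRRP moving a shared virtual IP); the node names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LoadBalancerNode:
    name: str
    healthy: bool = True  # assumed to be updated by an external health monitor


def active_node(nodes: list[LoadBalancerNode]) -> LoadBalancerNode:
    """Return the first healthy node in priority order.

    nodes[0] is the preferred (active) load balancer; the rest are backups
    that only take over when every node ahead of them is down. When the
    primary recovers, this rule hands traffic back to it (some setups
    deliberately disable this automatic failback).
    """
    for node in nodes:
        if node.healthy:
            return node
    raise RuntimeError("all load balancers are down")
```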

Session Storage

If your service identifies users with sessions rather than token-based authentication, running multiple servers causes problems, because each session is held in the memory of the server that created it. For example, when a user logs in through server 1, server 1 stores the session data; if the user’s next request is routed to server 2, which does not have that session data, the user is forced to log in again.
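The failure mode is easy to reproduce. In this minimal sketch, each hypothetical `AppServer` keeps a plain dictionary of sessions in its own memory, standing in for a framework’s default in-memory session store.

```python
import secrets
from typing import Optional


class AppServer:
    """A web server that keeps sessions in its own process memory."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._sessions: dict[str, str] = {}  # session_id -> username

    def login(self, username: str) -> str:
        session_id = secrets.token_hex(16)  # random, hard-to-guess session ID
        self._sessions[session_id] = username
        return session_id

    def current_user(self, session_id: str) -> Optional[str]:
        # Only sessions created by *this* server are visible here.
        return self._sessions.get(session_id)


if __name__ == "__main__":
    server1, server2 = AppServer("server-1"), AppServer("server-2")
    sid = server1.login("alice")
    print(server1.current_user(sid))  # the server that handled the login knows her
    print(server2.current_user(sid))  # the other server has no record of the session
```

If a load balancer sends the second request to `server2`, the lookup comes back empty and the user appears to be logged out.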

To fix this, session data can be moved off the individual servers and stored in a separate location, such as Redis, an in-memory data structure store. All of the servers then read and write sessions through the Redis server. Because that Redis server is now itself a single point of failure, we can add redundancy to it (for example, replicas) to keep the application resilient.
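The fix can be sketched by giving every server the same session store. To keep the example runnable without a Redis server, `SharedSessionStore` below is a dictionary-backed stand-in with a per-key time-to-live, similar in spirit to a Redis key with an expiry; the class names, the `session:` key prefix, and the one-hour TTL are assumptions. With the redis-py client, `save` and `load` would map roughly to `client.set(key, value, ex=ttl_seconds)` and `client.get(key)` (which returns bytes unless the client is created with `decode_responses=True`).

```python
import secrets
import time
from typing import Optional


class SharedSessionStore:
    """Dict-backed stand-in for Redis: one store shared by every app server."""

    def __init__(self, ttl_seconds: int = 3600) -> None:
        self._ttl = ttl_seconds
        self._data: dict[str, tuple[str, float]] = {}  # key -> (value, expiry time)

    def save(self, key: str, value: str) -> None:
        self._data[key] = (value, time.monotonic() + self._ttl)

    def load(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._data[key]  # expired, like a Redis key whose TTL ran out
            return None
        return value


class AppServer:
    """A web server that delegates session storage to the shared store."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self._store = store  # every server receives the same store object

    def login(self, username: str) -> str:
        session_id = secrets.token_hex(16)
        self._store.save(f"session:{session_id}", username)
        return session_id

    def current_user(self, session_id: str) -> Optional[str]:
        return self._store.load(f"session:{session_id}")
```

Logging in through one server and making the next request through another now works, because both look sessions up in the same place.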

The same applies to any other data that would otherwise be saved on an individual server: move it out to shared storage that all servers can access.

The other way to solve session storage is to switch to token-based authentication, which shifts the session load from the server to the client. Because each user’s session data is stored on their own client, this approach scales very well, whereas keeping every session in server memory can exhaust that memory once the user base is large enough.
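To see why tokens remove the need for server-side session storage, here is a minimal sketch of a signed token built with Python’s standard library. It is a simplified, JWT-like format of our own (not the JWT standard), and it assumes a hypothetical shared secret configured on every server; any server holding that secret can verify a token without looking anything up. For production use, a maintained JWT library is the safer choice.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret; every app server must be configured with the same value.
SECRET_KEY = b"replace-with-a-long-random-secret"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(body: str) -> bytes:
    return hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).digest()


def issue_token(username: str, ttl_seconds: int = 3600) -> str:
    """Create a token that carries the session data itself, signed with HMAC-SHA256."""
    claims = {"sub": username, "exp": int(time.time()) + ttl_seconds}
    body = _b64(json.dumps(claims).encode("utf-8"))
    return f"{body}.{_b64(_sign(body))}"


def verify_token(token: str) -> Optional[str]:
    """Return the username if the token is authentic and unexpired, else None."""
    parts = token.split(".")
    if len(parts) != 2:
        return None
    body, signature = parts
    expected = _b64(_sign(body)).encode("ascii")
    # compare_digest avoids leaking, through timing, how many bytes matched.
    if not hmac.compare_digest(expected, signature.encode("utf-8")):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["exp"] < time.time():
        return None
    return claims["sub"]
```

The client sends the token with each request (commonly in an `Authorization` header), so whichever server receives the request can check the signature and expiry on its own, and nothing per-user has to live in server memory.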

Caching Database Queries

It’s worth emphasizing caching database queries here: it is not only one of the most straightforward ways to scale your database, it also has a significant impact on scaling your server(s), because serving cached results removes a significant amount of work from the stack.
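One common way to cache query results is the cache-aside pattern: look the query up in a cache first and only run it against the database on a miss. The sketch below keeps results in a process-local dictionary with a time-to-live for illustration; in a horizontally scaled setup you would more likely keep them in a shared cache such as Redis so that every server benefits. The class name, key format, and 60-second TTL are assumptions.

```python
import time
from collections.abc import Callable, Hashable
from typing import Any


class QueryCache:
    """Cache-aside wrapper: check the cache first, hit the database only on a miss."""

    def __init__(self, ttl_seconds: float = 60.0) -> None:
        self._ttl = ttl_seconds
        self._entries: dict[Hashable, tuple[Any, float]] = {}  # key -> (rows, expiry)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, load: Callable[[], Any]) -> Any:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]
        self.misses += 1
        value = load()  # the expensive database query runs only here
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Call after writes so readers don't see stale rows for the full TTL.
        self._entries.pop(key, None)
```

In an application, `load` would be something like `lambda: conn.execute(sql, params).fetchall()` on a `sqlite3` (or other DB-API) connection, and the key could be the `(sql, params)` pair. Choosing the TTL is the main trade-off: longer values save more database work but serve stale data for longer unless writes call `invalidate`.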

Using a Content Delivery Network to Serve Static Assets

Static assets are files that your server sends to users without modifying them. Static HTML pages and JavaScript files are examples of static assets that are relatively light. Others, such as images and videos, are large resources that burden your server when they are sent over the network to a client every time a user requests a page. If your application relies heavily on static content, your first scaling step should be to offload serving static assets from your server(s) to a CDN.

A content delivery network (CDN) is a network of geographically distributed servers that work together to deliver static content quickly. Content is cached on these servers and delivered to each client from the server closest to it.
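The two ideas in that description, routing each client to the closest server and answering from that server’s cache, can be sketched as a toy model. The region names, latencies, and origin function are hypothetical, and real CDNs typically steer clients with DNS or anycast routing and honor HTTP caching headers rather than keeping every object forever.

```python
from collections.abc import Callable


class EdgeServer:
    """One CDN point of presence with its own cache of static assets."""

    def __init__(self, region: str, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.region = region
        self._fetch_from_origin = fetch_from_origin
        self._cache: dict[str, bytes] = {}

    def serve(self, path: str) -> bytes:
        if path not in self._cache:
            # Cache miss: pull the asset from the origin once, then keep it.
            self._cache[path] = self._fetch_from_origin(path)
        return self._cache[path]


def nearest_edge(edges: list[EdgeServer], latency_ms: dict[str, float]) -> EdgeServer:
    """Pick the edge with the lowest measured latency to this client."""
    return min(edges, key=lambda edge: latency_ms[edge.region])
```

After the first request for an asset, every later request routed to the same edge is answered from that edge’s cache, so the origin server is contacted once per asset per edge instead of once per page view.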

Cloud provider services such as Amazon S3 and CloudFront from AWS make this simple. All you have to do is set up a basic configuration, and your static assets will be delivered much faster, giving your application a significant performance boost that your users will appreciate.
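As one piece of that configuration, uploading assets to S3 with explicit Content-Type and Cache-Control metadata lets CloudFront and browsers cache them sensibly. The sketch below uses boto3’s `upload_file` with its `ExtraArgs` parameter; the cache durations, the assumption that asset file names change on every release, and the bucket and directory names are all placeholders to adapt. Creating the bucket and the CloudFront distribution is a separate step not shown here.

```python
import mimetypes
from pathlib import Path

# Assumed cache policy: cache fingerprinted assets (e.g. app.3f9a1c.js) for a year,
# and HTML only briefly so new releases show up quickly.
LONG_CACHE = "public, max-age=31536000, immutable"
SHORT_CACHE = "public, max-age=300"


def upload_args(filename: str) -> dict[str, str]:
    """Build the ExtraArgs passed to boto3's upload_file for one asset."""
    content_type, _ = mimetypes.guess_type(filename)
    cache_control = SHORT_CACHE if filename.endswith(".html") else LONG_CACHE
    return {
        "ContentType": content_type or "application/octet-stream",
        "CacheControl": cache_control,
    }


def upload_static_dir(root: str, bucket: str) -> None:
    """Upload every file under `root` to the S3 bucket that CloudFront serves from."""
    import boto3  # imported here so upload_args works without boto3 installed

    s3 = boto3.client("s3")
    base = Path(root)
    for file in base.rglob("*"):
        if file.is_file():
            key = file.relative_to(base).as_posix()  # keep the directory layout
            s3.upload_file(str(file), bucket, key, ExtraArgs=upload_args(file.name))
```

A call such as `upload_static_dir("dist", "my-static-assets-bucket")` (both names hypothetical) would publish a build directory, using whatever AWS credentials boto3 finds in the environment.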

Final Thoughts

Thanks to IaaS providers like AWS, scaling an application is now a breeze. We can still implement scaling solutions manually, but in most cases much of the heavy lifting can be abstracted away, so the development team can focus on the application layer.

Congratulations, your app now has the right solutions in place to handle server and database traffic. It is not yet time to celebrate, though. We humans have short attention spans, so blazing speed and performance have become a must-have if you don’t want to lose your hard-earned customers to that annoying copycat who couldn’t come up with an idea of their own.